Introduction to Decision Tree

In this post, I will discuss about Decision Tree, which is the fundamental algorithm of the well-known Tree-based algorithms. This post will also address two metrics that are employed in Decision tree and many other machine learning algorithms, even in deep learning.

1. Metric

Why do we need the “Metric” in tree-based algorithm?

The answer will be to find the best splits in the tree. We use the metric, calculate a variable, measure the split score with the value, and choose the value for the best split at each step of finding the split or the predictors in the tree. Many other metrics or score functions are used to find the “best” split for different tree-based algorithms. Two metrics will be discussed, which are Gini Impurity (or Gini Index) and Information Gain with Entropy.

1.1 Gini Index

Suppose we have a dependent categorical variable, which have $\mathcal{J}$ classes. Let $i \in \{1,2,…,\mathcal{J}\}$ and $p_i$ is the probability given each factor levels of dependent variable.d

Then, the Gini Index, or Gini Impurity is defined as below,

$$I_{\mathcal{G}}(p) = \sum_{i=1}^{J}p_i \sum_{k \neq i}p_k = \sum_{i=1}^{J}p_i(1-p_i) = \sum_{i=1}^{J}(p_i-p_i^2) = \sum_{i=1}^{J}p_i - \sum_{i=1}^{J}p_i^2 \\ = 1-\sum_{i=1}^{J}p_i^2$$

For example,

suppose we have a binary variable that follows below.

$X = [FALSE,FALSE,TRUE,TRUE,….,TRUE,FALSE]$

![[table]](/2019/09/19/DecisionTree/image1.png)

Then, the Gini Impurity will be the followng by the definition,

$$1 - (\frac{8}{20})^2 - (\frac{12}{20})^2 = 0.48$$

What about the Gini Impurity for this binary variable?

![[table]](/2019/09/19/DecisionTree/image2.png)

Then, the Gini Impurity will be

$$1 - (\frac{1}{20})^2 - (\frac{19}{20})^2 = 0.095$$

Now, we see that the smaller Gini Impurity is, we find the better split point.

Therefore, we want to find the smaller Gini Impurity score as possible as we can at each step to find best split in the tree.

Now, let we have two binary variables and make table for each factors like the below,

$$Y = [FALSE, FALSE, … , TRUE] \\ X = [YES, YES, … , NO]$$

And, we want to predict $Y$ with $X$.

![[table]](/2019/09/19/DecisionTree/image3.png)

We will have only one stump in a Decision Tree for this table. The root node, which is the very top of the tree, will be the predictor, $X = [YES, YES, … , NO]$

The tree is something like..

![[table]](/2019/09/19/DecisionTree/Tree1.png)

The typical tree in real world is much more complicated with more nodes and splits, since they have many predictors.

This tree that has only one split is sometimes called a stump, which is often called a weak learner in Adaboost.

But, I will just call this as a tree for this example.

Then, the process of finding Gini Impurity is the below;

1) Find Conditional probability

For example, the Conditional probability given $X$ is

$$P(Y = FALSE |X = YES) = \frac{5}{7} \\ P(Y = TRUE | X = YES) = \frac{2}{7} \\ P(Y = FALSE | X = NO) = \frac{3}{13} \\ P(Y = TRUE | X = NO) = \frac{10}{13}$$

2) Find each Gini Index for the conditional probability

$$I_{Gini}(Y|X=YES) = 1 - (\frac{5}{7})^2 - (\frac{2}{7})^2 = 0.4081633 \\ I_{Gini}(Y|X=NO) = 1 - (\frac{3}{13})^2 - (\frac{10}{13})^2 = 0.3550296 $$

Then, we find a Gini Impurity score for a leaf node in this tree, which is the very bottom of the tree or the final classification.

![[table]](/2019/09/19/DecisionTree/Tree2.png)

3) Find Marginal probability

The margianl probabilty given $X$ is

$$P_X(X=YES) = \frac{7}{20} \\ P_X(X=NO) = \frac{13}{20}$$

Since the tree is splitted by $X$, we find the Gini Impurity of the total tree.

4) Multiply and add the Gini Index and marginal probability associated with the given probability respectively. Finally, we have Gini Impurity Score for a split.

Then, the Total Gini Impurity Score for this tree is,

$$I_{Gini}(Y|X=YES) \times P_X(X=YES) + I_{Gini}(Y|X=NO) \times P_X(X=NO) = \\ 0.4081633 \times \frac{7}{20} + 0.3550296 \times \frac{13}{20} = 0.3736264$$

Then, we eventually find the total Gini Impurity score for a tree.

![[table]](/2019/09/19/DecisionTree/Tree3.png)

In real world problem, we have many predictors and so many nodes, possibly many trees such in Random Forest.

With this process of finding Gini Impurity, we compare the Gini score and find the best split point of the best predictor with best splits.

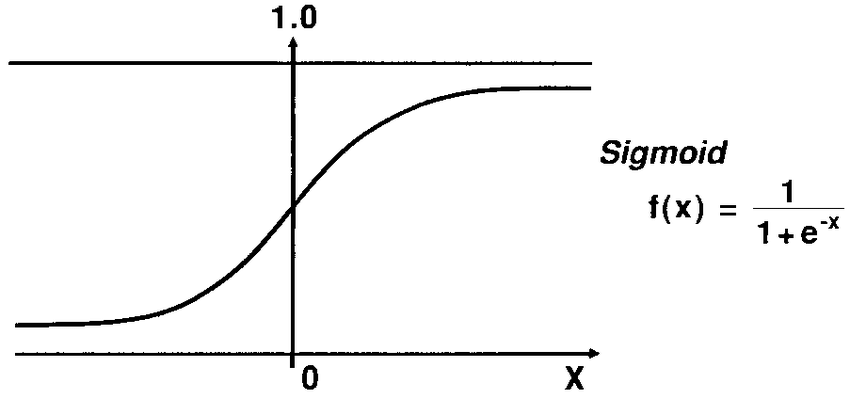

1.2 Entropy

Entropy is defined as below;

$$H(T) = -\sum_{i=1}^{J}p_ilog_2(p_i)$$

And the process of finding best split in a tree is to find the Information Gain.

The Information Gain is often called Kullback-Leiber divergence and defined as below;

$$IG(T,a) = H(T) - H(T|a)$$

where $IG(Y,X)$ is the Information Gain of Y given X, $H(Y)$ is the parent Entropy of Y, and $H(Y|X)$ is the children Entropy of Y given X, and the $H(Y|X)$ is often called cross-entropy.

The process of finding Information Gain is very similar to the Gini Score, but here we find the bigger value of IG.

I will use the same example problem as above.

For the above example,

$$Y = [FALSE, FALSE, … , TRUE] \\ X = [YES, YES, … , NO]$$

![[table]](/2019/09/19/DecisionTree/image3.png)

Then, as I have shown, find the conditional probability.

1) Find the conditional probability given $X$

$$P(Y = FALSE |X = YES) = \frac{5}{7} \\ P(Y = TRUE | X = YES) = \frac{2}{7} \\ P(Y = FALSE | X = NO) = \frac{3}{13} \\ P(Y = TRUE | X = NO) = \frac{10}{13}$$

2) Find the entropy given $X$

$$I_{Entropy}(Y|X=YES) = -\frac{5}{7}log_2(\frac{5}{7}) - \frac{2}{7}log_2(\frac{2}{7}) = 0.8631206\\ I_{Entropy}(Y|X=NO) = -\frac{3}{13}log_2(\frac{3}{13}) - \frac{10}{13}log_2(\frac{10}{13}) = 0.7793498 $$

3) Find the marginal probability

The margianl probabilty given $X$ is

$$P_X(X=YES) = \frac{7}{20} \\ P_X(X=NO) = \frac{13}{20}$$

4) Calculate the Total Entropy and Cross-Entropy

Total Entropy for a tree divided by $X$ is

$$-P_X(X=YES) \times log_2(P_X(X=YES))-P_X(X=NO) \times log_2(P_X(X=NO)) \\ = -\frac{7}{20}log_2(\frac{7}{20}) - \frac{13}{20}log_2(\frac{13}{20}) \\ = 0.9340681$$

Cross Entropy for a leaf node is

$$I_{Entropy}(Y|X=YES) \times P_X(X=YES) + I_{Entropy}(Y|X=NO) \times P_X(X=NO) \\ = 0.8631206 \times \frac{7}{20} + 0.7793498 \times \frac{13}{20} \\ = 0.8086696$$

5) Find Information Gain with Total Entropy and Cross Entropy

Information Gain = Total Entropy - Cross Entropy

$$= 0.9340681 - 0.8086696 = 0.1253985$$

For another example that shows why bigger Information Gain is better,

suppose we have the dataset following;

![[table]](/2019/09/19/DecisionTree/table.png)

It’s obviously better to split for Y given X than the above example, since this has only one mistake.

If we find the Information Gain of this data,

The Total Entropy for a tree divided by $X$ is 1, since this splits exactly the same number of target observations with 10 and 10.

$$-P_X(X=YES) \times log_2(P_X(X=YES))-P_X(X=NO) \times log_2(P_X(X=NO)) = \\ -\frac{10}{20}log_2(\frac{10}{20}) - \frac{10}{20}log_2(\frac{10}{20}) \\ = 0.5 + 0.5 = 1$$

The cross entropy for a leaf node is

$$I_{Entropy}(Y|X=YES) \times P_X(X=YES) + I_{Entropy}(Y|X=NO) \times P_X(X=NO) \\ = 0.234478$$

calculated by the same process of the above.

Then, the Information Gain is

$$1 - 0.234478 = 0.7655022$$

Therefore, we find the bigger Information Gain as possible as we can.

As a summary, Decision Tree Algorithm is..

1) Find the best split with the metric score for each splits and each predictors.

2) Compare the metric score with every splits and other predictors and find the best split and best predictors.

3) The best predictors that has the best metric score will be the roof node, and keep building a tree with the metric scores.

4) The very bottom of the tree, which is the leaf node, will be our final classification.

If we have continuous predictors, there is no split point like “YES” or “NO”. Therefore,

1) we sort the data associated with the numerical predictors,

2) calculate the average for all adjacent indexes of the numerical predictor,

find the Gini Impurity for all the adjacent average value,

and compare the Gini Impurity to find the best split point of adjacent average of the numerical predictors.

Below is the Implementation in R. I used iris dataset, and a dataset that I created.

Some of codes are skipped, and you can refer to my Gibhub website to see full algorithm.

1 | #removing one of the classes of the target variable, virginica in Iris Dataset. |

![[tree1]](/2019/09/19/DecisionTree/IntroductionDecisionTree-1.png)

This is the prediction by Decision Tree built in R.

![[tree1]](/2019/09/19/DecisionTree/IntroductionDecisionTree-2.png)

Here is the Decision Tree algorithm with the introduced two metrics that I built.

1 | #average function to create adjacent average between predictor indexes |

1 | #by Entropy metric, we want to find the maximum entropy score |

![[tree1]](/2019/09/19/DecisionTree/Prediction-1.png)